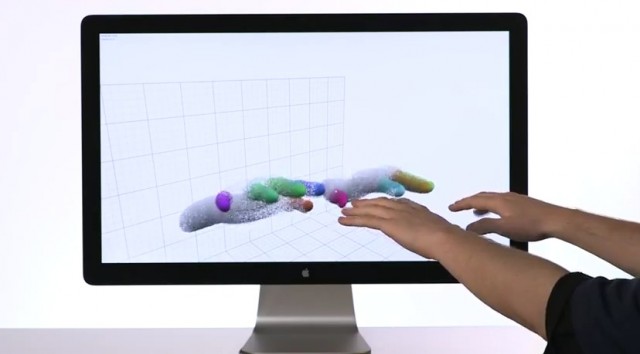

Developers have announced the Leap – a new $70 motion sensor that they say is “two hundred times more accurate than any product currently on the market.”

Like the Microsoft Kinect, the Leap is designed to translate your gestures and movement into computer control. But the developers suggest that the Kinect is a toy, compared to the Leap:

This isn’t a game system that roughly maps your hand movements.

The Leap technology is 200 times more accurate than anything else on the market — at any price point. Just about the size of a flash drive, the Leap can distinguish your individual fingers and track your movements down to a 1/100th of a millimeter.

Here’s a video introduction for the Leap:

http://www.youtube.com/watch?v=1RIh6GdRYjY

Seeing this immediately got us thinking about possible music applications.

There has been some developer interest in the Kinect. But we’ve previously noted several important limitations of the Kinect for music applications:

Using a limited resolution sensor (640 x 480 pixel) with a relatively slow sampling rate (30 fps) means that the Kinect captures a fraction of the information that an older, simpler and cheaper “touchless” analog technology – the theremin – is capable of.

The limited resolution means that there’s a practical limit to the number of steps of control that the Kinect can afford.

More critical for musicians, though, is the Kinect’s latency. The frame rate alone of the Kinect is going to create 35-40 milliseconds of latency. In practice, though, the latency appears to be much higher, closer to 1/10th of a second.
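To put rough numbers on the frame-rate point, here is a minimal Python sketch. The 30 fps figure and the 1/10th of a second estimate come from the text above; the function names are just illustrative. The frame interval is only a floor on latency, so any capture and processing overhead would add to it.

```python
# Back-of-the-envelope latency numbers for a camera-based controller.
# The 30 fps rate and the ~100 ms observed latency come from the article;
# the function names are illustrative, not part of any Kinect API.

def frame_interval_ms(fps: float) -> float:
    """Time between successive frames, in milliseconds."""
    return 1000.0 / fps

def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Express a latency figure as a number of frame intervals."""
    return latency_ms / frame_interval_ms(fps)

if __name__ == "__main__":
    fps = 30
    print(f"Frame interval at {fps} fps: {frame_interval_ms(fps):.1f} ms")
    print(f"100 ms of latency is about {latency_in_frames(100, fps):.0f} frames")
```

At 30 fps the interval works out to about 33 ms, and a tenth of a second corresponds to roughly three frames.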

These limitations and others have damped interest in the development of Kinect-based music hacks.

Comparing the Kinect to the Leap isn’t an exact apples to apples comparison, because the Leap is tailored to discrete hand gestures, while the Kinect is designed for broader full-body motion.

But the Leap is cheaper, much more accurate and looks like it will offer improved latency over the Kinect. And this should make the Leap better suited to general purpose music hacking. In fact, Leap Motion is looking for interested developers.

Check out the video demo and let us know what you think. Could the Leap succeed where the Kinect has failed and become a new tool for music making?

Kinect is a waste of time, money, plastic and development time, and its greatest asset is the marketing bonanza behind it. It was supposed to be an interesting input method for games, but they traded its usability for bigger profit margins by leaving a dedicated signal processor out of it at the last minute. It’s not made with music applications in mind, but the input latency is so high that it even limits the gameplay design, and thus Kinect games are simple, very small and full of automation.

And what’s worse, now they are stuck with it. Do they show their arse to their customers by dropping Kinect support in the next generation, or do they continue to waste money on it?

Minority report, ARE YOU FINALLY HERE MINORITY REPORT?!?!?!

Gimmick aside, I don’t think anyone will care about this.

>the Leap can distinguish your individual fingers and track your movements down to a 1/100th of a millimeter

>>I don’t think anyone will care about this

One reason touch screens are good for corporations is that they have fewer moving parts than keyboards, and simply fewer parts overall–they’re easier to fabricate and build into products and they don’t break in the ways keys break or fall off. The Leap is even simpler (from a build/design view) than a touch screen. So for the exact same reasons corporations prefer touch screens over keyboards, corporations will like the Leap if it works. And if some little corporation somewhere gets some buzz and makes a little money with Leap, then Apple will build it into a computer and EVERYONE will care about it and LOVE it and DESIRE it and it will become the future.

I so agree with you.

I will take a leap of faith (hehe) and order one.

My thoughts exactly! Separate, it would be a cool, useful gadget. Incorporated, it could be the next touch screen. I don’t think it will replace touch screens. At least not for some time, but it would replace the mouse or the annoying, hard-to-control mouse pad or whatever it’s called.

It would be great for designers and musicians. Imagine full control over synths real time… The mouse just can’t do that…

Good thing that the developers seem to be smart people. One of them from NASA. Might prove to be a very nice little gadget. And the price! Neat.

Using the Kinect you just can’t get the accuracy, especially when tracking body parts’ positions in relation to each other. To do any form of music other than soft pad control, you need better than the minimal 640×480 resolution and 30 frames per second. The Kinect also uses a drastic amount of CPU and so requires separate music and controller machines unless you use a later quad-core machine. Very keen to see how this goes.

If developers jump on board en masse, it could be great. It is as natural an evolution as keyboard-only was to mouse. It would be nice if they made it cross-platform to iOS also. I can think of several controller solutions that could be had that would benefit from being away from the computer, utilizing gestures. I just want it to someday speak like Jarvis also.

“I don’t think anyone will care about this.”

The porn industry is going to be all over this like some crazy rash..

Musically, it would be really a great add on for performances no doubt..

In order for this to hit big, we have to get over our need to have something contact the end of our fingers. Keyboards & touch screens have that physical contact that motion sensing products don’t, and to move your hand accurately in a 3D space without a physical response of manipulation is a skill that will have to be practiced heavily by the user… I can get theremins to only sound like a dying cat without pitch correction, because my hand coordination in that space is not precise enough. And like a theremin, it’s gotta take a lot of thought to get the movements and positions perfect, because there is a different kind of muscle memory involved.

Flame away…

>I can get theremins to only sound like a dying cat without pitch correction, because my hand coordination in that space is not precise

I know what you mean, but designers can be creative. For instance, if it can sense your finger positions, nothing stops a designer from putting in a guide rail carefully etched, maybe two, and the Leap would sense your finger moving along the etched positions of the guide rails. Or a screen can give visual feedback by color or something as your fingers move within note parameters so you keep your fingers in position by subconsciously matching some visual cue. Theremins are always built (the ones I’ve seen) as basic technological building blocks with no real thought given to providing hand guidance and feedback (other than sound) to the operator. If someone takes a Leap and isn’t restricted by budget or design principles from being redundant–if they can provide many different forms of related feedback to the user–I bet the device could be great.

Well, I’ve just pre-ordered mine. I’ve been interested in gestural interfaces for a while, but the only thing stopping me from getting really into programming it is making hardware that will work. When they ship around December/January, I’ll be at uni doing music technology. There’s a lot of Max/MSP stuff on the course, and I feel this could be useful.

It’s just a matter of finding out what it’s good for – a computer mouse can’t do 3 axes of control with recognition of what fingers you’re using, but then if you put a mouse still on the table the cursor doesn’t move….

>a computer mouse can’t do 3 axes of control

Actually, this is one of the areas where this type of UI completely fails. Do a little exercise and try mapping out a control scheme using a touch-less control system for a program like Maya or 3DS Max. You won’t get more than 5 minutes into it before you realize that it’s a completely useless interface approach for complex 3D related tasks. Even a touch screen is noticeably inferior to a mouse and keyboard when it comes to precision and speed. Don’t let those cheesy demos fool you where someone pulls out floors on a building and rotates it around. That was all programmed to look cool when using a couple very crude hand gestures.

I think it looks great, imagine playing a virtual instrument with it like you would on a keyboard

So what if Apple buys this technology and builds it into the next iPhone or iPad? Everyone will appreciate it.

This makes more sense to me than multitouch for big screens. Do you want to have smudges all over a big screen?

I’d like to see this with Live’s Session View. If you could scroll around the screen with hand gestures. It would be easier to know where you are than with something like the Launchpad. Plus, you wouldn’t need to look back and forth from a controller to the screen.

Yes, waving your hands in the air is for sure the most optimal for your workflow. I can already feel the pain after 8h of work with a computer and this device (WORK, not gaming).

Must have missed the part where it said it had the power to make all keyboards and mice disappear…

The point of this isn’t to type or mouse around by waving your hands in the air, but to interact with your computer in ways that a keyboard and mouse don’t let you.

The video has vanished, when I finally have a chance to look at it….

Video is still on the Leap site. Looks like it has potential, though it does have a slight feeling of vapour about it. Looks a little too good etc, but that has happened before and things appeared in real life.

Drums, automation, programming etc – mouse.

Sends and returns,

Delays,

Granular synthesis,

filters,

reverbs, anything that can be given a natural flow – hook it up to this – endless possibilities, more free. You get X, Y and Z axes, i.e. 3 parameters mapped to your hands, giving you more dynamics.

Of course these are basic primitive ideas, but a step forward – endless possibilities. I don’t see it as a replacement, but as a great addition!
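As a rough illustration of the three-axis idea above, a hand position could be scaled to three MIDI-style controller values (0 to 127). This is only a sketch: the coordinate ranges, the parameter names and the `hand_to_params` helper are all hypothetical, and nothing here reflects how the Leap actually reports positions.

```python
# Sketch: map a 3D hand position to three 7-bit controller values.
# The axis ranges and parameter names are made-up assumptions for
# illustration; they are not taken from any Leap documentation.

def scale_to_cc(value: float, lo: float, hi: float) -> int:
    """Map value in [lo, hi] to an integer 0..127, clamping out-of-range input."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    norm = (value - lo) / (hi - lo)
    norm = min(1.0, max(0.0, norm))  # clamp to the valid range
    return round(norm * 127)

# Hypothetical working volume for each axis, in millimeters.
BOUNDS = {"x": (-150.0, 150.0), "y": (50.0, 350.0), "z": (-100.0, 100.0)}

def hand_to_params(x: float, y: float, z: float) -> dict:
    """Assign one (hypothetical) synth parameter to each axis."""
    return {
        "filter_cutoff": scale_to_cc(x, *BOUNDS["x"]),
        "grain_size": scale_to_cc(y, *BOUNDS["y"]),
        "reverb_send": scale_to_cc(z, *BOUNDS["z"]),
    }

if __name__ == "__main__":
    print(hand_to_params(x=150.0, y=50.0, z=500.0))
```

Clamping keeps the output valid when the hand drifts outside the assumed volume, which matters for a controller with no physical end stops.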

We could have had “wave your hands around” computer UI a decade ago, but it doesn’t work well for users and is actually hugely limiting in its potential. You can do much, much more with a mouse and keyboard, or physical touch screen, and be far more precise. Touchless motion control is crude and limiting at best, no matter how much resolution the system has.

It doesn’t matter how cheap or how high-resolution the sensors get, this form of UI simply doesn’t offer more than we already have, and so will never be more than a curiosity.

But that’s like saying there’s no room for Kinect, as a joypad is more accurate. Or Smart TVs are pointless because the remote is much more tactile. It’s pretty plain to me the incredible potential this can add to making software become a much more fluid and attached experience. I still find it a joy using multitouch on OSX; my PC laptop OS feels like a relic. Imagine using gestures to flick between editing windows, mixers, tweaking the odd setting, flowing about your arrange window, triggering transport functions.

And totally shit the bed, like someone above mentioned, imagine a 3 axis granular synthesis controller, soundwarp and Curtis on the iPhone are impressive and unique as it is.

Having been one of the first people to buy a Mac so, so many years ago, I distinctly remember clients telling me that the mouse was a waste of time. A gimmick.

I have preordered just to get a glimpse of what the future may hold