New York-based sound designer Matt McCorkle created Weather Machine – a weather data sonification experiment.

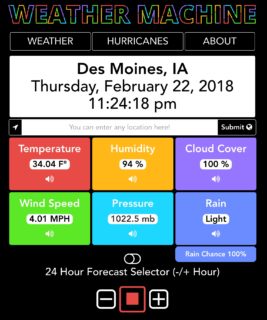

The project translates weather data from The Dark Sky API into soundscapes based on local weather conditions and historical climate data.

The soundscape can be tweaked by selecting the forecast location and timeframe, and by enabling or disabling different facets of the forecast.

“The goal of this web app is to explore the use of sound to express data that affects our everyday lives,” says McCorkle. “Additionally, to pose the question: ‘Can data sonification be used to help people better understand their weather and our changing climate?’”

I had this idea decades ago about taking various data sets or streams (tides, atmospheric pressure over time, temperature, humidity, etc.) and scaling them so that they are pushed into the audio range.

For example, if you chose temperature, you’d have a year be a single cycle (one year would be, say, a 100 Hz tone), and then the daily up-and-down of temperature would be an overtone.
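Here is a rough sketch of that year-as-one-cycle mapping in Python, assuming hourly readings and a 365-day year. The function names and the synthetic temperature curve are made up for illustration; no real weather data or API is involved. One thing the arithmetic shows: the daily cycle always lands 365 times above the yearly one, so a 100 Hz year puts the daily overtone at about 36.5 kHz, above the roughly 20 kHz limit of human hearing. A yearly fundamental near 20 Hz keeps both in the audible range.

```python
import math

DAYS_PER_YEAR = 365  # assumption: leap years ignored


def playback_rate(samples_per_year, year_hz):
    """Sample rate (Hz) at which one year of data plays back as one cycle of year_hz."""
    return samples_per_year * year_hz


def daily_overtone_hz(year_hz):
    """Frequency the day/night cycle lands on once a year is pitched to year_hz."""
    return year_hz * DAYS_PER_YEAR


def synthetic_temperature(samples_per_day=24, years=1):
    """Made-up hourly temperatures: a seasonal swing plus a smaller daily swing."""
    per_year = samples_per_day * DAYS_PER_YEAR
    return [
        10.0 * math.sin(2 * math.pi * i / per_year)           # seasonal cycle
        + 3.0 * math.sin(2 * math.pi * i / samples_per_day)   # day/night cycle
        for i in range(per_year * years)
    ]


def normalize(series):
    """Center the series on its mean and scale it into [-1, 1] for audio use."""
    mean = sum(series) / len(series)
    centered = [x - mean for x in series]
    peak = max(abs(x) for x in centered) or 1.0
    return [x / peak for x in centered]


if __name__ == "__main__":
    for year_hz in (100.0, 20.0):
        rate = playback_rate(24 * DAYS_PER_YEAR, year_hz)
        print(f"year at {year_hz} Hz: daily cycle at {daily_overtone_hz(year_hz)} Hz, "
              f"play hourly samples at {rate} samples/s")
    audio = normalize(synthetic_temperature())
    print(f"{len(audio)} samples ready to write out as audio")
```

The normalized samples could then be written to a sound file at the computed rate; that step is left out here to keep the sketch short.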

With tides or water level, you could have waves be the fundamental frequency, or you could use daily tides or lunar cycles. If it wasn’t organized enough to be a tone, it would certainly be a fascinating “noise” to hear.

A high-resolution positioning device could be placed on a skyscraper to measure its sway, and that data could be pitched up so you’d hear the building being “bowed” by the wind.

All you need is data. It’d be fun to hear.

I further suggest that there are things we could learn from hearing those things adapted to the audio range that might be surprising and/or useful.

Now imagine making ambient works from your Fitbit data.